Google has recently launched its new language model, Gemini. This next-generation AI model is designed to understand and operate across different types of information. It is currently integrated with Google’s chatbot Bard and is thought by some to be more flexible than GPT-4.

What is Gemini?

Gemini is Google’s next-generation foundation model. It follows on from PaLM 2, the model that previously powered Google’s Bard chatbot and other recently announced features. Gemini is a multimodal language model designed to understand and reason across text, images, and other media. It is trained on multimodal data that includes text, images, and code, and it can be used for tasks such as interpreting images, translating languages, generating code, and writing different kinds of creative content.

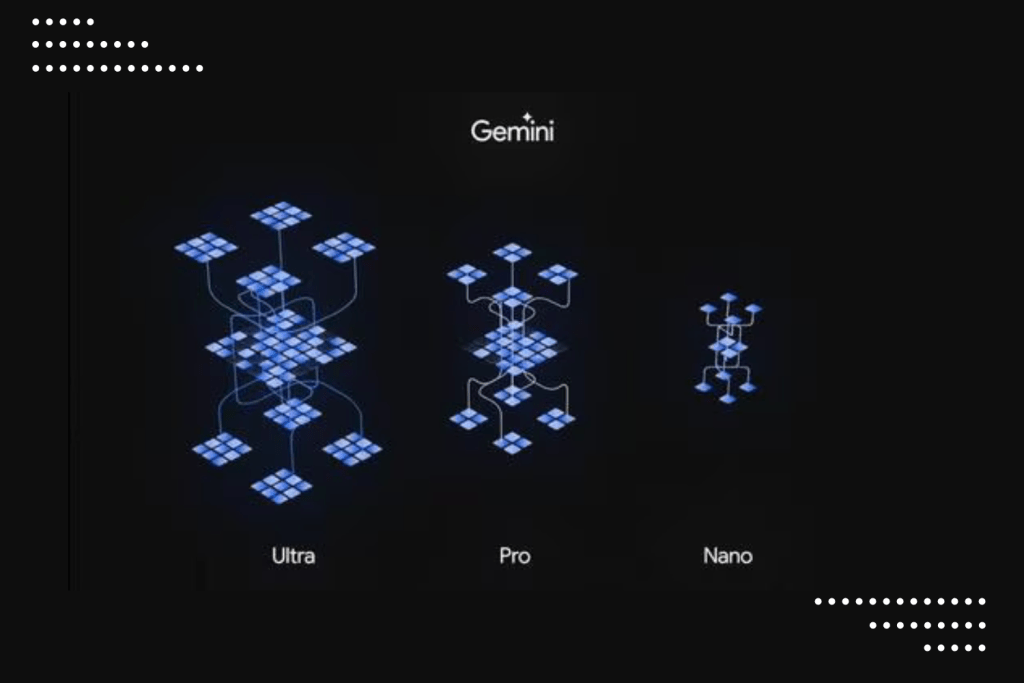

The Three Sizes of Gemini

- Gemini Nano: Gemini Nano is a lightweight AI model designed specifically for mobile devices, starting with the Pixel 8 Pro. It is efficient enough to run tasks on the device itself, even when offline. Gemini Nano can suggest relevant replies in chat apps and summarise text, delivering AI capabilities without relying on external servers. This keeps the experience smooth and responsive for Pixel 8 Pro users.

- Gemini Pro: Gemini Pro is a more advanced version of Gemini, set to become the driving force behind several Google AI services. It now serves as the foundation for Google’s AI chatbot, Bard, which uses a specially tuned version of the model. Running in Google’s data centres, Gemini Pro is designed to deliver fast responses and a stronger ability to understand complex queries.

- Gemini Ultra: Gemini Ultra is Google’s largest and most capable Large Language Model (LLM). It is designed for highly complex tasks and is primarily meant for data centres and enterprise applications. Although it is not yet widely available, Google claims that Gemini Ultra exceeds current state-of-the-art results on 30 of the 32 widely used academic benchmarks for LLM research and development. The model is currently in testing and is expected to be released soon.

Each variant of Gemini is designed for different needs and use cases, from on-device tasks to large-scale enterprise workloads. For more detailed information, I recommend checking Google’s official announcements or technical documentation.

Key Features of Gemini

Gemini is expected to be one of the most powerful AI models ever built. It boasts a range of sophisticated features that set it apart from its predecessors:

- Multimodal Capabilities: Gemini is designed to be multimodal from the ground up. This means it can understand and combine different types of content, such as text, images, audio, and video.

- Human-like Conversations: Gemini is designed to master human-style conversations, language, and content.

- Image Interpretation: Gemini can understand and interpret images.

- Code Generation: Gemini can understand, explain, and generate code in popular programming languages such as Python, Java, C++, and Go.

- Data and Analytics: Gemini can help analyse data, for example by extracting insights from large collections of documents.

- Developer-friendly: Developers can use Gemini to create new AI apps and APIs.

How Does Gemini Work?

When given input with more than one data type (modality), such as a piece of text and an image, Gemini extracts the relevant details from each. It then uses an attention mechanism to find the most important features or patterns across the extracted data. This lets the model focus on the parts of the input that matter most for the task at hand.
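The attention step can be illustrated with a minimal sketch of scaled dot-product attention, the standard mechanism in Transformer models. This is not Gemini’s actual implementation, which is not described here. The vectors are hypothetical, hand-picked values standing in for learned embeddings, and real models also apply learned query, key, and value projections that this sketch omits. A text token acts as the query and attends over image-patch vectors, so the patch most similar to the query receives the largest weight.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Tuple[Vector, List[float]]:
    """Scaled dot-product attention for a single query vector.

    Scores each key by its dot product with the query divided by sqrt(d),
    converts the scores to weights with softmax, and returns the weighted
    sum of the values together with the weights.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


# Hypothetical 4-dimensional embeddings; a real model learns these.
text_query = [1.0, 0.0, 1.0, 0.0]  # stands in for the word "cat"
image_patches = [
    [0.9, 0.1, 0.8, 0.0],  # patch showing the cat
    [0.0, 1.0, 0.0, 1.0],  # patch showing the background
    [0.1, 0.0, 0.2, 0.9],  # patch showing a chair
]

# In this simplified example the patches serve as both keys and values.
output, weights = attention(text_query, image_patches, image_patches)
print([round(w, 2) for w in weights])  # → [0.52, 0.22, 0.26]
```

With these toy values, the patch showing the cat receives about half of the attention weight, so it contributes most to the combined output vector.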

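Gemini was created from the ground up to be multimodal. While many equate multimodal AI with any AI that can work with different content, such as images or text, for Google the term means much more: a model pre-trained from the start on different modalities, rather than one built by stitching together separately trained components for each.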

Availability of Gemini

Gemini is now available on the Pixel 8 Pro, starting with Gemini Nano. It brings enhanced features, including Summarise in the Recorder app and Smart Reply in Gboard, which initially works in WhatsApp. In the coming months, Gemini will expand to more Google products and services, including Search, Ads, Chrome, and Duet AI.

Google is already experimenting with Gemini in Search, where it says Gemini has reduced latency in the Search Generative Experience (SGE) by 40% in English in the U.S., alongside improvements in quality.

Starting from December 13th, developers and enterprise users will be able to access Gemini Pro via the Gemini API in Google AI Studio or Google Cloud Vertex AI. In addition, Android developers can use Gemini Nano through AICore, a new feature available in Android 14 on Pixel 8 Pro devices. While Gemini Ultra undergoes trust and safety evaluations, a select group of customers, developers, partners, and safety experts will have access to it for early testing and feedback. It will be made available to a wider audience of developers and enterprise users early next year.

Gemini vs GPT4

At the moment, it is hard to say whether Gemini is better than GPT-4, but Gemini appears to be more flexible. Its ability to work with video, and to run on devices without an Internet connection through Gemini Nano, also gives it an edge. Another factor is that Gemini Pro is free to use through Bard, while GPT-4 is available in ChatGPT only to paid subscribers.

Conclusion

Gemini Ultra is still being tested, and Gemini is expected to be a key rival to OpenAI’s GPT-4 once it is fully launched. According to Google, Gemini was built to be multimodal, with a focus on tool and API integrations, which will allow for wider collaboration. It is also being designed to accommodate future developments, such as improved memory and planning. We can expect Gemini to keep building on these capabilities across areas such as the workplace, security, and productivity.