Have you ever imagined what it would be like to create and edit images using only text? Well, now you can. Google recently released Imagen 2, an upgraded text-to-image model available through Vertex AI on Google Cloud. Imagen 2 offers improved image quality and new features aimed at Vertex AI customers.

What is Imagen 2?

Imagen 2 is a text-to-image diffusion model that generates high-quality, photorealistic images from text. It combines large transformer language models, which help the system understand the prompt, with diffusion models, which generate images with fine detail. The result is a high degree of photorealism together with strong language understanding.

Imagen 2 allows developers to generate novel images using only a text prompt (text-to-image generation), edit an entire uploaded or generated image with a text prompt, and edit only parts of an uploaded or generated image using a mask area they define. They can also upscale existing, generated, or edited images, and fine-tune a model on a specific subject (for example, a specific handbag or shoe) for image generation. Finally, they can get text descriptions of images with visual captioning and get answers to questions about an image with Visual Question Answering (VQA).
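To make the mask-based editing mode a little more concrete, here is a minimal Python sketch of what a mask means: it marks which pixels may change and which must stay as they are. This is not how Imagen 2 or the Vertex AI API is implemented. The function name `composite_with_mask` and the tiny grayscale grids are made up for illustration, and a real editing model also uses the mask while generating the new content, not only when blending at the end.

```python
def composite_with_mask(original, generated, mask):
    """Blend two same-sized grayscale images pixel by pixel.

    Mask value 1.0 takes the generated pixel, 0.0 keeps the original pixel,
    and values in between mix the two.
    (Hypothetical helper for illustration, not part of any Google API.)
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same number of rows")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("images and mask must have the same row length")
        result.append(
            [m * g + (1.0 - m) * o for o, g, m in zip(orig_row, gen_row, mask_row)]
        )
    return result


# A 2x3 "image" of dark pixels, a fully regenerated bright version,
# and a mask that only allows three of the six pixels to change.
original = [[10, 10, 10], [10, 10, 10]]
generated = [[200, 200, 200], [200, 200, 200]]
mask = [[0.0, 1.0, 0.0], [0.0, 1.0, 1.0]]

edited = composite_with_mask(original, generated, mask)
print(edited)  # [[10.0, 200.0, 10.0], [10.0, 200.0, 200.0]]
```

Only the pixels where the mask is 1.0 pick up the new content. Everything outside the masked area is carried over unchanged, which is the behavior you want from a partial edit.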

How does Imagen 2 work?

Google has not published a full architectural description of Imagen 2, so the outline here follows the original Imagen paper. That design uses a large frozen T5-XXL encoder to turn the input text into a sequence of embeddings. The embeddings condition a diffusion model, which starts from pure random noise and removes it step by step until an image that matches the text emerges. The denoising network is a U-Net that attends to the text embeddings through cross-attention, so the meaning of the prompt guides every denoising step. In the original Imagen, a base model generates a 64×64 image, and two further text-conditioned diffusion models upscale it to 256×256 and then 1024×1024.
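The noising and denoising idea behind diffusion models can be sketched in a few lines of plain Python. This is a toy example on a short list of numbers, not Imagen's implementation. The linear noise schedule, the function names, and the "perfect" noise estimate used at the end are all assumptions made for illustration. In a real system, a trained neural network conditioned on the text embeddings predicts the noise, and the image is recovered over many small steps rather than in one jump.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule.

    alpha_bar close to 1 means "almost clean"; close to 0 means "almost pure noise".
    Requires num_steps >= 2. (Schedule values are illustrative assumptions.)
    """
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward process: mix the clean signal x0 with Gaussian noise in one step."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    xt = [signal_scale * x + noise_scale * n for x, n in zip(x0, noise)]
    return xt, noise


def estimate_clean(xt, predicted_noise, alpha_bar):
    """Invert the forward formula, given an estimate of the noise that was added."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [(x - noise_scale * n) / signal_scale for x, n in zip(xt, predicted_noise)]


rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)

clean = [0.5, -1.0, 0.25]  # stand-in for a few pixel values
noisy, true_noise = add_noise(clean, alpha_bars[500], rng)

# Here we cheat and reuse the true noise; a real model has to predict it
# from the noisy image, the step number, and the text embeddings.
recovered = estimate_clean(noisy, true_noise, alpha_bars[500])
```

With an exact noise estimate, `recovered` matches `clean` up to floating-point error. The quality of a real diffusion model comes down to how well its network approximates that noise for a given prompt.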

Benefits of Imagen 2

Imagen 2 offers several benefits for developers and users who want to create and edit images from text. Some of these benefits are:

- It can generate photorealistic images that match the input text description.

- It can handle complex and diverse requests that involve multiple objects, scenes, styles, and attributes.

- It can edit existing images by changing their content or appearance based on the input text.

- It can upscale low-resolution images while preserving their detail.

- It can fine-tune models for specific domains or tasks that require specialized knowledge or skills.

- It can provide rich information about the generated or edited images through visual captioning and VQA.

Examples of Imagen 2

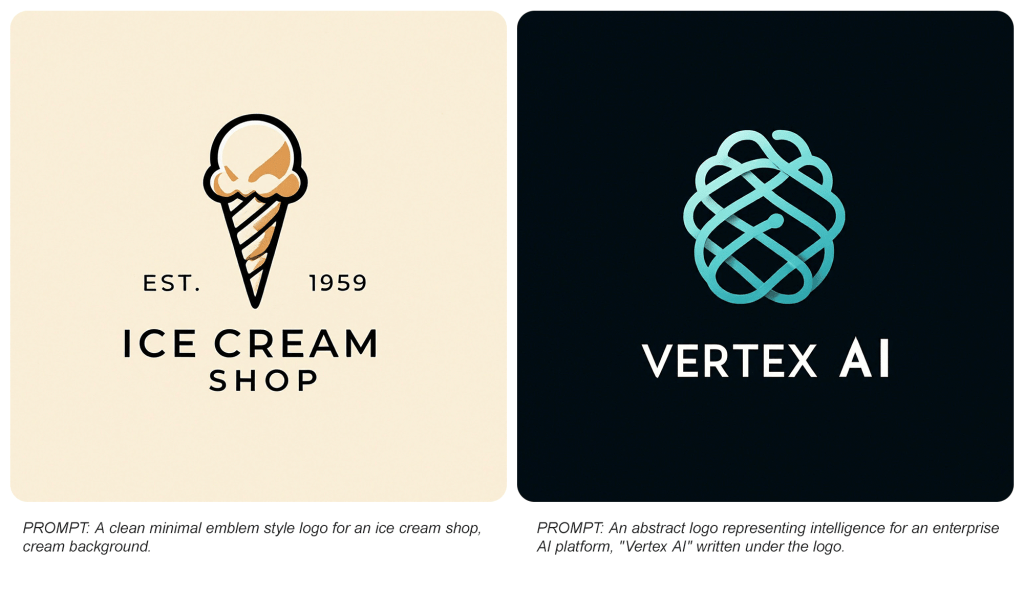

Here are some examples of what Imagen 2 can do:

- Generate novel images using only a text prompt: “A dragon fruit wearing karate belt in the snow”.

- Edit an entire uploaded or generated image with a text prompt: “A photo of a Corgi dog riding a bike in Times Square. It is wearing sunglasses and a beach hat”.

- Edit only parts of an uploaded or generated image using a mask area you define: “A transparent sculpture of a duck made out of glass. The sculpture is in front of a painting of a landscape”.

- Upscale existing, generated, or edited images: “A small cactus wearing a straw hat and neon sunglasses in the Sahara desert”.

- Fine-tune a model with a specific subject (for example, a specific handbag or shoe) for image generation: “A red leather handbag with gold studs”.

- Get text descriptions of images with visual captioning: “A portrait of Albert Einstein smiling”.

- Get answers to questions about an image with Visual Question Answering (VQA): “Who is the person in this picture?”.

Imagen 2 and DALL-E 2

Google Imagen 2 and DALL-E 2 are both text-to-image AI systems that can generate realistic images from text prompts. However, they have some differences in their architectures, capabilities, and performance.

Imagen builds on a T5 text encoder, a transformer language model that encodes the prompt into embeddings. A diffusion model then turns those embeddings into an image gradually: it starts from random noise and denoises it step by step, guided by the text embeddings. This description follows the original Imagen paper, since Google has not published the full architecture of Imagen 2.

DALL-E 2 is built around CLIP, a model trained to place images and text in a shared embedding space. As described in OpenAI's paper, a CLIP text encoder turns the prompt into a text embedding, a prior model maps that text embedding to a matching CLIP image embedding, and a diffusion decoder generates the image from that image embedding. Diffusion upsamplers then raise the output to its final resolution.

In the human evaluations reported in the original Imagen paper, which used the DrawBench prompt set, raters preferred Imagen over DALL-E 2 for both image fidelity and image-text alignment. DrawBench includes prompts that test compositionality, cardinality, spatial relationships, and rendering of text, and it covers complex requests that involve multiple objects, scenes, styles, and attributes. These comparisons were made with the original Imagen model rather than Imagen 2. DALL-E 2 is sometimes credited with advantages in creativity, diversity, originality, and style transfer, although these qualities are harder to measure. As described in their papers, both systems produce images at up to 1024×1024 pixels.

Conclusion

Imagen 2 is Google’s state-of-the-art generative AI system that lets you create and edit images from text. With Imagen 2, you can transform your imagination into high-quality visual assets in seconds. If you want to learn more about Imagen 2 or try it out yourself, you can visit Vertex AI on Google Cloud or check out some demos online.