A major security issue has surfaced at DeepSeek, a fast-growing artificial intelligence company from China. One of its databases was left exposed on the internet without authentication. The exposure allowed unauthorized access to sensitive data, including chat history, API secret keys, and internal logs.

Database Vulnerability Discovered

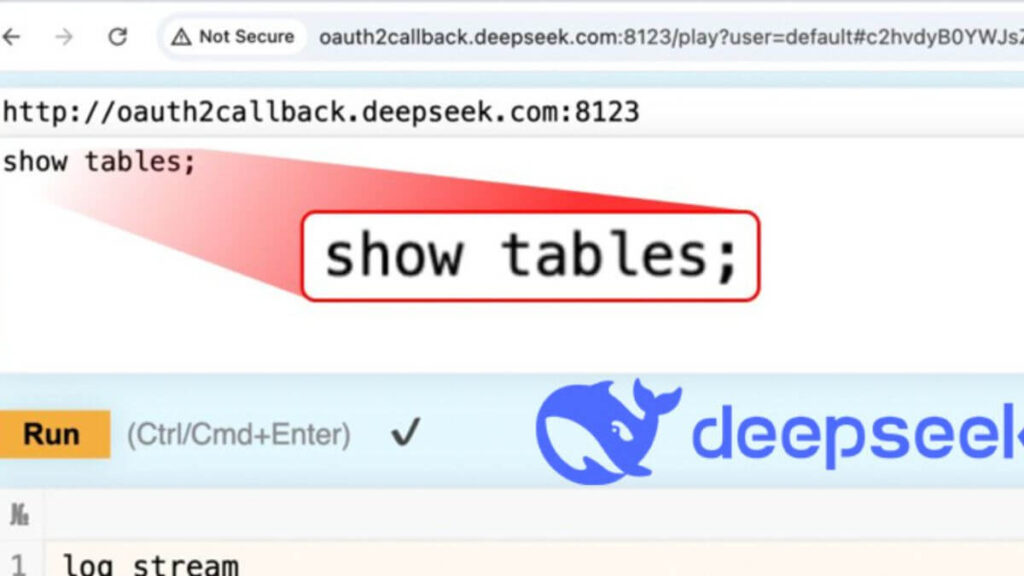

Security researchers from Wiz found the flaw in DeepSeek’s ClickHouse database. The database allowed full access without authentication. Any attacker could have used ClickHouse’s HTTP interface to run SQL queries directly from a web browser.

The affected database was accessible through oauth2callback.deepseek[.]com:9000 and dev.deepseek[.]com:9000. Anyone who found these endpoints could query the database without supplying credentials.
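
For teams that run ClickHouse themselves, the check below is a minimal sketch of how to test whether an instance they own answers queries without credentials. It sends `SELECT 1` to the HTTP interface and classifies the reply. The function names and the `open`/`protected`/`unknown` labels are illustrative assumptions, not part of any tool Wiz used. The port is a parameter; 8123 is ClickHouse's documented default for HTTP, and a deployment may use a different one. Run it only against systems you are authorized to test.

```python
from urllib.error import HTTPError
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def build_probe_url(host: str, port: int = 8123) -> str:
    """Build a URL asking the ClickHouse HTTP interface to run SELECT 1."""
    query = urlencode({"query": "SELECT 1"}, quote_via=quote)
    return f"http://{host}:{port}/?{query}"


def classify_response(status: int, body: str) -> str:
    """Interpret the reply to an unauthenticated SELECT 1."""
    if status == 200 and body.strip() == "1":
        return "open"        # the query ran with no credentials at all
    if status in (401, 403):
        return "protected"   # the server demanded authentication
    return "unknown"         # anything else needs a manual look


def probe(host: str, port: int = 8123, timeout: float = 5.0) -> str:
    """Send the probe without credentials and classify the result."""
    try:
        with urlopen(build_probe_url(host, port), timeout=timeout) as resp:
            body = resp.read().decode("utf-8", "replace")
            return classify_response(resp.status, body)
    except HTTPError as err:
        # Error statuses such as 401 or 403 arrive as exceptions in urllib.
        return classify_response(err.code, "")
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return "unreachable"
```

A result of `open` from a network location outside the trusted perimeter means the instance has the same class of problem described above.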

Wiz researcher Gal Nagli warned that AI companies must prioritize security. He highlighted that the biggest threats often come from basic errors like unprotected databases.

What Was Exposed?

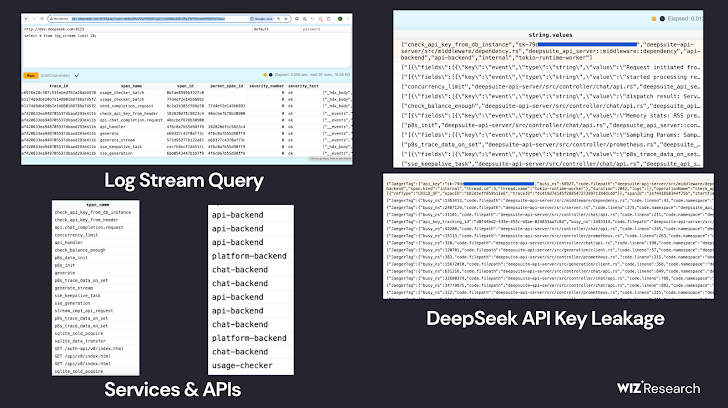

The security lapse revealed more than one million log lines, exposing crucial data such as:

- Chat history logs

- API secret keys

- Backend operational details

- Internal metadata

Hackers could have misused this data to escalate privileges and gain deeper access to DeepSeek’s systems.
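
One common way to limit the damage from leaked logs is to mask secrets before log lines are stored. The snippet below is a rough sketch of that idea, not a description of DeepSeek's logging pipeline. The two patterns (keys starting with `sk-` and bearer tokens) are hypothetical shapes chosen for illustration; real key formats vary by provider.

```python
import re

# Hypothetical secret shapes, for illustration only.
SECRET_PATTERNS = [
    # API keys that start with "sk-" followed by 16+ alphanumerics
    re.compile(r"sk-[A-Za-z0-9]{16,}"),
    # Token that follows the word "Bearer " (case-insensitive)
    re.compile(r"(?<=bearer )[A-Za-z0-9._\-]{16,}", re.IGNORECASE),
]


def redact(line: str, mask: str = "[REDACTED]") -> str:
    """Replace anything that looks like a secret with a fixed mask."""
    for pattern in SECRET_PATTERNS:
        line = pattern.sub(mask, line)
    return line


if __name__ == "__main__":
    print(redact("Authorization: Bearer abcdefghijklmnop1234"))
    print(redact("calling upstream with key=sk-ABCDEFGHIJKLMNOP1234 ok"))
```

Pattern-based masking will miss secrets that do not match a known shape, so it complements access controls on the log store rather than replacing them.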

How DeepSeek Responded to the Security Breach

After Wiz reported the issue, DeepSeek acted quickly to secure its systems. The company released an update on January 29, 2025, confirming that it had fixed the vulnerability. However, it remains unclear whether any malicious actors had already accessed or stolen the exposed data.

DeepSeek also faced growing concerns over its privacy policies. The company had already paused new registrations for its AI chatbot after being targeted by large-scale cyberattacks.

DeepSeek’s Rapid Growth and Security Risks

DeepSeek has gained immense popularity in the AI industry. It offers open-source AI models that challenge leading systems from companies like OpenAI. Its R1 reasoning model has been hailed as a major breakthrough in AI development.

The AI chatbot became a top app on Android and iOS in multiple markets. However, with rapid growth come security risks. Many AI firms focus on speed and neglect security measures.

Data Privacy Concerns and International Scrutiny

DeepSeek’s security issues have attracted attention from international regulators. Italy’s data protection agency questioned DeepSeek’s data handling practices. Shortly after, DeepSeek’s apps were no longer available in Italy.

Meanwhile, in the United States, concerns over DeepSeek’s Chinese origins have led to national security discussions. Government agencies are now examining the company’s data security and privacy policies.

Did DeepSeek Use OpenAI’s API Without Permission?

A major controversy surrounds DeepSeek’s AI model training. Reports from Bloomberg, The Financial Times, and The Wall Street Journal suggest that DeepSeek may have used OpenAI’s API without permission.

The technique at issue, known as distillation, lets a company replicate an advanced model by training its own model on that model’s outputs. OpenAI and Microsoft are now investigating whether DeepSeek engaged in such activities.

An OpenAI spokesperson told The Guardian, “We know that groups in China are trying to replicate US AI models using distillation.”

Lessons for AI Companies

DeepSeek’s database exposure highlights the critical need for AI security. The rapid development of AI services should not come at the cost of data protection.

Companies must implement strong security measures, including:

- Encrypting sensitive data

- Restricting access to internal databases

- Conducting regular security audits

- Implementing robust authentication mechanisms
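
As a rough illustration of what a regular audit for the access and authentication items above might automate, the sketch below walks a service inventory and flags anything that listens on every network interface, lacks authentication, or both. The `Service` fields, the sample inventory, and the finding labels are all hypothetical. A wildcard bind address does not prove a service is reachable from the internet, since firewalls may still block it, so treat the output as a list of things to verify.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    bind_address: str     # address the service listens on
    port: int
    requires_auth: bool   # whether clients must authenticate


# Wildcard addresses: the service listens on every interface.
PUBLIC_BINDS = {"0.0.0.0", "::"}


def audit(services):
    """Return (service name, finding) pairs for risky configurations."""
    findings = []
    for svc in services:
        exposed = svc.bind_address in PUBLIC_BINDS
        if exposed and not svc.requires_auth:
            findings.append((svc.name, "critical: public and unauthenticated"))
        elif exposed:
            findings.append((svc.name, "warning: listens on all interfaces"))
        elif not svc.requires_auth:
            findings.append((svc.name, "warning: no authentication"))
    return findings


if __name__ == "__main__":
    # Hypothetical inventory for demonstration.
    inventory = [
        Service("analytics-db", "0.0.0.0", 8123, requires_auth=False),
        Service("api-gateway", "0.0.0.0", 443, requires_auth=True),
        Service("metrics", "127.0.0.1", 9100, requires_auth=False),
        Service("billing-db", "10.0.0.5", 5432, requires_auth=True),
    ]
    for name, finding in audit(inventory):
        print(f"{name}: {finding}")
```

In this sample inventory, the database that combines a wildcard bind with no authentication is the one flagged as critical, which is the same combination that left DeepSeek's data exposed.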

Cybersecurity is essential in today’s AI-driven world. Without proper security, even the most advanced AI models remain vulnerable to attacks.

Final Thoughts

DeepSeek has plugged the security gap, but the incident raises serious concerns. It shows how quickly security can be compromised if companies fail to protect sensitive information.

AI companies must learn from this mistake and prioritize user data protection. Without strong security, even the most promising AI startups can face serious reputational and legal risks.

DeepSeek’s future remains uncertain as regulators and competitors continue to scrutinize its practices. The company must take strong security measures to regain trust and ensure long-term success.